December Days 02025 #15: Chalk Mark

Dec. 15th, 2025 11:46 pm

Comments to earlier entries in the series, and many of the other times that I talk about my (lack of) technical skills or l33t coding ability, and with regard to cooking by recipe, as well, have pushed back on the still persistent conception I have that recipe following is not doing the thing, and that there is no great skill in executing someone else's code to create something that works (or something delicious.)

Thank you for doing so. I know it is a weasel-thought, and yet I have trouble keeping it away from myself. I cannot see what it looks like from the outside, only from the inside. I know all the things that I have at my disposal, and I have used them enough that they no longer appear to be special to me.

A regular part of my job is troubleshooting. Most of it is what I would consider the simple stuff, where I have seen the error message sufficient numbers of times to know what the likely process should be to fix the problem, or it's clear that someone has gone astray from the established process and needs to be guided back to the way that will work, or to be taught the thing that they actually want, instead of the thing they said they wanted, when it becomes clear the thing they said they wanted was not actually what they wanted. As I have said before, a large amount of the training I have as an information professional is not extensive knowledge of the specifics of any one implementation, but a good dose of the general concepts behind them, and a confidence that when encountering a specific situation, that general knowledge will be enough to get to a specific solution. Or at least enough key phrases to toss into a search engine and read a good candidate page for the specifics of how to get something done. It makes me seem like I know much more about what I'm doing than I actually do. And knowing that there's the undo command available in most places means experimentation is much more possible than if it were not. I still sometimes have to work through people's anxiety or anger about the machine and what it will do to their material, but for the most part, I can get people to click and/or type in the places I would like them to so they get the desired result that we're both looking for.

If I can't actually succeed at getting something to work, I try to send along bug reports that are as detailed as I can make them when there are inevitably tickets filed for things that are out of my control or I need to call in the people with the specialized skill set and knowledge base to fix things. (Learning how to file a good ticket is something I wish they taught everyone who works in libraries, and plenty of other places, too. It makes everyone's job easier when they have a handle on what the issue is, or when there's information in error messages being conveyed to help zero in on the problem.)

However, because I can manage to obtain and wield knowledge at a quick rate for helping people, I've also developed a little bit of a reputation for being good with machines, or manifesting beneficial supernatural auras around them, or being able to work through what the problem is that we're facing and find a solution to it. So I sometimes get or find on my own some of the more esoteric issues that show up. And sometimes I get to laugh my ass off when the solution presents itself. Observe:

The problem: Someone couldn't get to Google after signing in to the library's computer. That's not usually a thing, because, well, Google. So I observe the attempt and get to read the error message.

The error message: "Tunnel connection failed."

Hrm. While I'm not an expert in networking, running a quick search on that error message has the results come back and suggest there's something gone wrong with a proxy of some sort. Let's see if we can figure out what's going on here.

- First check: we're not having a widespread network outage. Other computers are still going fine, so that's not the case.

- Second check: Yep, all the cabling is plugged in at both ends, so that's not it.

- Third check: Do websites other than Google load? Yes, they do, so the problem is not that all connections are being denied by whatever the proxy error is, just the one to Google. (Or to Google and some unknown number of other websites.)

- Fourth check: Is it just this machine that's having trouble getting to Google?

I grab the next public computer over, and check the following:

- Can I get to Google if I use the secret superuser login? Yes.

- Can I get to Google using my own library card and selecting the "unfiltered" Internet access option? Yes.

- Can I get to Google using my own library card and selecting the "filtered" Internet access option?

Nope! And the error message that I get back matches the error message I first saw when I started investigating.

We have a winner! Now I have an idea of what happened, and what the proxy server was that caused the problem.
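The checks above boil down to taking the failing setup and changing one thing at a time until the failure goes away. Here is a minimal sketch of that idea in Python. Everything in it is hypothetical and for illustration only: the factor names, the `simulated_can_reach` stand-in for "try to load Google with this setup," and the values themselves are assumptions, not anything the library's systems actually expose.

```python
from typing import Callable, Dict, List

Config = Dict[str, str]


def isolate_failing_factor(
    baseline: Config,
    alternatives: Dict[str, str],
    can_reach: Callable[[Config], bool],
) -> List[str]:
    """Return the factors that, when changed alone, make the failure go away."""
    culprits = []
    for factor, other_value in alternatives.items():
        # Copy the failing setup and change exactly one thing.
        trial = dict(baseline)
        trial[factor] = other_value
        if can_reach(trial):
            culprits.append(factor)
    return culprits


def simulated_can_reach(config: Config) -> bool:
    # Hypothetical stand-in for a real test: in this story, only the
    # "filtered" access option was breaking the connection.
    return config["access"] != "filtered"


# The setup that failed, and one alternative value per factor (all assumed names).
failing_setup = {"machine": "computer A", "access": "filtered"}
one_change_each = {"machine": "computer B", "access": "unfiltered"}

print(isolate_failing_factor(failing_setup, one_change_each, simulated_can_reach))
# ['access']
```

Swapping machines alone does not help in this simulation, while swapping the access option does, which is the same conclusion the manual checks reached.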

So I ask what setting the user chose when logging in. The user confirms to me that they chose the "filtered" option when logging in. So I had to explain that to get to Google at this particular moment in time, they'd have to log out and choose the other option from whatever they chose this time around. The user might have been embarrassed about this happening to them. I wonder if they thought that engaging the filters would make them less likely to receive advertisements or spam or other kinds of things like that, and especially on topics they might not be interested in. Sadly, that's not the case, and while I have lobbied regularly to have proper extensions installed on the public machines that will do most of that malvertising and ad-blocking as a default, IT has not yet seen fit to include it in their deployment. (And they also have settled on Edge and Chrome as the browsers we offer, and Chrome nerfed the effective ad-blockers earlier this year because Alphabet is fundamentally an ad company that has some other software tools they offer.)

[Diversion: I don't particularly like filtering software. I think it causes more problems than it solves, and frankly, I would rather we didn't have to deal with it at all, but Congress, in their lack of wisdom, decided in the Children's Internet Protection Act (CIPA) to tie federal E-Rate discounts and funding to ensuring we have "technology protection measures" in place to prevent minors from looking at age-restricted material. CIPA should qualify as a four-letter word in my profession. So, to actually provide services for our users at a rate that will not be disastrous, we have to implement the filters, since that's the easiest way of ensuring compliance with the law.

The other problem I have with filters is that they tend to be things created with the idea of a parent that wants no information about the world outside to make it to their child's computer as their primary customer and who they set the defaults for. This almost always results in over-filtering, because the defaults are tuned to the parent that wants no pornography, and also no sexualities other than straight, and no gender identities other than cis, and no way of communicating with the outside world, and so forth. And the people most affected by this, our kids and teenagers, are the ones who are least likely to tell a library staff person, "Hey, this site is informative and not explicit, and yet you have blocked it with your filters. Please unblock it." Because that creates the possibility of a paper trail. The kids are more likely to find some method of circumventing the filters entirely rather than asking for them to be more appropriately tuned.]

I am not trying to show that I am having a right and proper laugh that our filtering software is now blocking Google, even on Google's own browser, because that could be interpreted as laughing at the plight or embarrassment of the user, and that's not acceptable behavior. But I do go and file a ticket about the fact that the filters are apparently now blocking Google, and we should probably fix that, since our landing page for public machines points at GMail as one of its major outbound links. Turns out things were going rather haywire with the filters in their entirety, and the whole thing needed to be wrestled back into the intended effects instead of what had happened to all of us, according to the ticket update. I can imagine how many other users were particularly nonplussed about this as well. And I wonder how many of our under-17 users, the ones who have filters automatically chosen for them, had a time with filters gone off the rails.

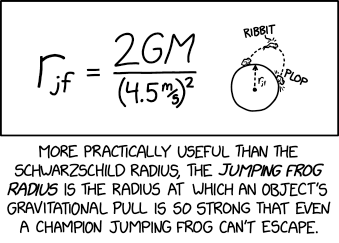

At the end of the story, even at the time it was happening to me, I also must once again grudgingly admit that I am a computer toucher who sometimes can solve problems as if I had magic. This is because of long experience in knowing where to put the chalk mark so that someone else can wallop it with a mallet later. (As the joke goes, an engineer is called in to fix a piece of malfunctioning machinery. He examines it, draws an X on a particular part of the machine, and then smacks it, bringing the machine back to full functionality. Later, the company receives a bill for $5000, an absurd amount of money, and demands the engineer itemize the expenses. He does so: "Chalk: $1. Knowing where to put it: $4,999.")

To drive the point home that week, a few days later, I had another instance of supposed computer magic. Someone was having trouble finding a thing they were sure they had saved to a personal OneDrive account they had signed into.

I could see the save on the local storage of the computer, and the folders that were on the signed-in OneDrive, but the file on the signed-in drive was not present.

- Check one: "Would you save the file again, so I can see what's happening?"

After watching them go through the process of how they were saving, I realized that the shortcut in the saving menu, despite saying "OneDrive," and Microsoft Word assuring the user they were signed into OneDrive correctly, was diverting itself to the OneDrive that would be associated with the Windows account on the computer itself. Instead of the signed-into personal OneDrive, the "OneDrive" shortcut in Word was for our Windows account used to sign in to the machine and run the program for user control through library cards and guest passes.

Cue massive eyeroll from me, and perhaps a choice comment about how computers are remarkably stupid, because they do what we tell them to do, and sometimes because they make assumptions and have defaults that are not correct. If this weren't in a user-facing context, I might have peppered my response with a few four-letter words of my own.

Now that I had an idea of what was going on, I could explain what was happening to the user, and from there, assist them through the save menus to get to the correct and proper OneDrive folder. Lo and behold, the file promptly appeared after Word had been told the correct path to save to. We made sure that the recently-saved document could be opened again, with the changes properly inserted, and, with the remaining time available to the session (I didn't mention it until now, but we were working under time pressure, both because an assignment was due and because the library computers were about to shut down and restart, no time extensions possible), figured out how to get a different document properly into edit mode so it could be then changed, saved, and uploaded for an assignment. The second upload happened with about 90 seconds left on the computer session, so you can probably also append a certain amount of "does excellent computer touching and calm instruction under pressure" to my skill list. (There have been more than a few times where I'm being called in at the last minute or something close to it and I have to manage to both create the save and get it off the local machine into something more permanent before the session expires. This is not fun, but I have several successes at this, including directing people through the process while they're panicking about losing all their work.)

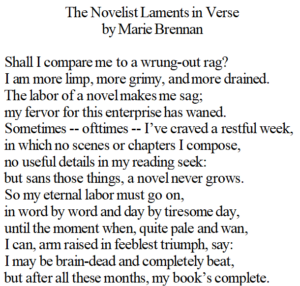

I think of these things as something that any information worker could do, if they had the same knowledge base as I do to draw from. I may be faster at it, and possibly able to detect and error correct from a wider range of possibilities due to my experience at what commonly shows up in these situations, but, as with most of the things that I do and get paid for, I maintain that it is not rocket science, computer science, or magic. And, because it's not something like having to learn to program in a language, or to diagnose and fix things like the workings of a passenger vehicle, or to do whatever the hell it is that Chocolate Guy is up to right now, all of which seem to require a specialized body of knowledge and a large experience base, I think of it as easier to pick up, comparatively. I suspect a fair number of you, a good number of my coworkers, and a great number of my users that I have pulled through a potential panic situation, would strenuously object to the idea of it being "easier," even with me accounting for the amount of practice that I have at making things look easier than they actually are. As I mentioned at the top of the post, I see from backstage, rather than from the audience, and therefore I am very likely to need irrefutable proof that "no…no, no, that is not the kind of thing that anyone can just pull out of their hat on a moment's notice!" Supposedly, a grandparent on one side was reputed to have the lack of skill at cooking to burn water, so the ability to follow a recipe is a significant improvement there.

And while I'm bashing my head against a computer problem for a game at this point and feeling very foolish about my inability to explain to a computer what's intuitive to me as a human, I have to remember that everything that I've accomplished so far is still pretty cool, even if it's not optimized, golfed, or doing things the "right" way all the time.

(It's a real pain in the ass, and the people who have been helping me with other problems freely admit it's a pain in the ass, because it's trying to do something with incomplete and possibly fuzzy information. I have to figure out how to get a computer to perform a sum of the values at particular indices of an array, and then, when that solution inevitably turns out to be wrong, to move one of the indices up or down one and run the sum again, and if that doesn't work, to do it again until the correct sum is reached. The potential problem space is too large to brute-force efficiently, and there are imprecise hints about where to plant your initial guess and make small adjustments from.

Once I can get the computer to do the adjustments until it reaches a solution, I have to figure out how, when the values of the problem space change due to other actions, to recalculate the sum based on the index pair that I already know is right, because that shouldn't change over the course of an attempted solve, even if the imprecise hints do change, because while the indices of the hints haven't changed, the values those indices refer to have, and so the correct solution has changed as well.)
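For what it's worth, the search described above can be sketched roughly like this in Python. This is only a sketch under stated assumptions, not the game's actual logic: the `is_correct` callback is a hypothetical stand-in for however the game confirms a sum, the `max_steps` limit is an invented safety bound, and the sample numbers are made up. It starts from the hinted pair of indices, nudges one index by one position at a time, and widens outward until a sum checks out. Once the pair is known, recomputing after the values change is a single lookup.

```python
from collections import deque
from typing import Callable, Optional, Sequence, Tuple

Pair = Tuple[int, int]


def find_index_pair(
    values: Sequence[int],
    hint: Pair,
    is_correct: Callable[[int], bool],
    max_steps: int = 3,
) -> Optional[Pair]:
    """Search outward from the hinted pair, moving one index by one per step."""
    seen = {hint}
    queue = deque([(hint, 0)])
    while queue:
        (i, j), steps = queue.popleft()
        if is_correct(values[i] + values[j]):
            return (i, j)
        if steps == max_steps:
            continue  # do not wander further than the allowed number of nudges
        for ni, nj in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1)):
            in_range = 0 <= ni < len(values) and 0 <= nj < len(values)
            if in_range and (ni, nj) not in seen:
                seen.add((ni, nj))
                queue.append(((ni, nj), steps + 1))
    return None  # no pair within max_steps of the hint produced a correct sum


def recompute_sum(values: Sequence[int], pair: Pair) -> int:
    """The pair stays fixed during a solve; only the values under it change."""
    i, j = pair
    return values[i] + values[j]


values = [4, 9, 2, 7, 5, 1]  # made-up data
pair = find_index_pair(values, hint=(1, 4), is_correct=lambda s: s == 12)
print(pair)  # (3, 4)

new_values = [4, 9, 2, 8, 6, 1]  # same indices, different values
print(recompute_sum(new_values, pair))  # 14
```

Searching breadth-first means the pairs closest to the hint get tried first, which matches the idea of making small adjustments from an imprecise starting guess rather than brute-forcing the whole space.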

So we all have our strengths and weaknesses, and our specialized body of knowledge to apply to any given situation. I will marvel at your skills from the audience, while I shrug at my own, since I see and use them so much. I see chalk marks as the thing I'm doing, and the thing that people ascribe value to, and not necessarily knowing where to put them.